Large Language Models (LLMs) are becoming increasingly common parts of modern applications and systems. As these applications grow more complex, the advantage of using standardized tools and structures for recurring tasks becomes more apparent. After all, you either use a framework or end up creating your own. This is where projects such as LangChain come in.

LangChain is an open-source framework designed to simplify the development of LLM-powered applications. It makes building complex LLM-enabled applications easier by offering ready-to-use integrations with third-party services and external data sources, prompt templates for different use cases, and a number of other useful tools. LangChain also makes it easy to combine different LLMs for specialized tasks. Currently, LangChain is available for Python and TypeScript.

LangChain is built around concepts like chains, tools, and agents. Plus, it provides simple ways to add memory to your app, so it can remember context across different sessions.

As the name suggests, chains are central to LangChain. A chain consists of two or more runnables “chained” together using the pipe operator | or the .pipe() method. Here, a “runnable” refers to any unit of work that implements the Runnable protocol. It can be anything from a simple function to a call to an LLM.
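To make the composition idea concrete, here is a minimal sketch in plain Python. `MiniRunnable` is a hypothetical toy class written for this illustration, not LangChain's actual implementation; it only shows how an `invoke` method, a `.pipe()` method, and the `|` operator can fit together.

```python
from typing import Any, Callable


class MiniRunnable:
    """Toy stand-in for a runnable (hypothetical, not LangChain's class)."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        # Run this unit of work on a single input.
        return self.func(value)

    def pipe(self, other: "MiniRunnable") -> "MiniRunnable":
        # Compose: the output of `self` becomes the input of `other`.
        return MiniRunnable(lambda value: other.invoke(self.invoke(value)))

    def __or__(self, other: "MiniRunnable") -> "MiniRunnable":
        # `a | b` is another spelling of `a.pipe(b)`.
        return self.pipe(other)


strip = MiniRunnable(str.strip)
shout = MiniRunnable(str.upper)
exclaim = MiniRunnable(lambda text: text + "!")

# Both spellings build the same three-step chain.
chain_with_operator = strip | shout | exclaim
chain_with_method = strip.pipe(shout).pipe(exclaim)

print(chain_with_operator.invoke("  hello  "))  # HELLO!
```

Each step only needs to accept the previous step's output, which is why plain functions and LLM calls can sit side by side in the same chain.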

For example, if we want to build a chain that looks up certain information on the web, transforms it to a specific format, and stores it in a database, we can build it as follows:

```python
from typing import Optional

from langchain_core.output_parsers import PydanticOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnableLambda
from langchain_openai import ChatOpenAI
from pydantic import BaseModel


class SearchDetails(BaseModel):
    query: str
    results: Optional[str]


def search(query: str) -> str:
    ...


def store(data: SearchDetails):
    ...


llm = ChatOpenAI()
parser = PydanticOutputParser(pydantic_object=SearchDetails)

# Adjacent string literals are concatenated, so each one ends with a space.
prompt = PromptTemplate(
    template="Format search results with the provided format. "
    "Search results: {results}. "
    "Format: {format_instructions}.",
    input_variables=["results"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)

# search -> prompt -> LLM -> structured output -> store
chain = RunnableLambda(search) | prompt | llm | parser | RunnableLambda(store)
chain.invoke("What is LangChain?")
```

Tools are components that extend what LLMs can do. For instance, they can perform math operations, call external APIs, interpret code, and more. LangChain offers a number of pre-built tools, but you can easily create a custom tool by decorating a function with the @tool decorator.

We can convert our search function into a tool and bind it to an LLM as follows:

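```python
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI


@tool
def search(query: str) -> str:
    """Search the web for the given query and return the results as text."""
    ...


llm = ChatOpenAI()

# bind_tools returns a new runnable; the original llm is left unchanged.
llm_with_tools = llm.bind_tools([search])
```

Now, the LLM may decide to call this tool based on the current context. We can help the model choose the correct tool by giving it a clear name and a description, written as a well-formed Python docstring, along with well-named and well-described parameters.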
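To see why the name, docstring, and parameters matter, it can help to look at the kind of metadata that can be derived from a function. The sketch below uses only the standard library; `describe_tool` is a hypothetical helper invented for this illustration, and the real schema LangChain sends to a model is richer and shaped differently.

```python
import inspect
from typing import Any, Callable, Dict, get_type_hints


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Collect the name, description, and parameter types of a function."""
    hints = get_type_hints(func)
    parameters = {
        # Fall back to "any" when a parameter has no type annotation.
        name: getattr(hints.get(name), "__name__", "any")
        for name in inspect.signature(func).parameters
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
    }


def search(query: str) -> str:
    """Search the web and return the most relevant results as plain text."""
    return f"results for {query}"


spec = describe_tool(search)
print(spec)
```

A vague name or an empty docstring leaves the model with little to go on, which is the practical reason for writing both carefully.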

Agents are high-level components that combine LLMs and tools to work autonomously in completing tasks. They can also collaborate with or be coordinated by another agent. Agents are especially useful in complex systems where each agent is tailored to perform specific tasks. This specialization can enhance reliability, as fewer responsibilities and tools reduce the likelihood of confusion and errors for an LLM.
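The core of an agent can be pictured as a loop: the model either asks for a tool call or returns a final answer, and every tool result is fed back as an observation. The following is a minimal sketch under strong assumptions: `scripted_model` is a hypothetical stand-in for a real LLM, and `run_agent` is an illustrative helper, not a LangChain API.

```python
from typing import Callable, Dict, List, Tuple

# A model step is ("call", tool_name, argument) or ("final", answer, "").
Step = Tuple[str, str, str]


def scripted_model(history: List[str]) -> Step:
    """Hypothetical LLM stand-in: requests one calculation, then answers."""
    observations = [item for item in history if item.startswith("observation:")]
    if not observations:
        return ("call", "add", "2,3")
    return ("final", observations[-1].split(":", 1)[1].strip(), "")


def add(argument: str) -> str:
    """Tool: add two comma-separated integers."""
    left, right = (int(part) for part in argument.split(","))
    return str(left + right)


def run_agent(
    task: str,
    model: Callable[[List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    history = [f"task: {task}"]
    for _ in range(max_steps):
        kind, name_or_answer, argument = model(history)
        if kind == "final":
            return name_or_answer
        # The model asked for a tool: run it and feed the result back.
        result = tools[name_or_answer](argument)
        history.append(f"observation: {result}")
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is 2 + 3?", scripted_model, {"add": add})
print(answer)  # 5
```

The `max_steps` guard reflects a common design choice: an autonomous loop needs an explicit stopping condition in case the model never produces a final answer.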

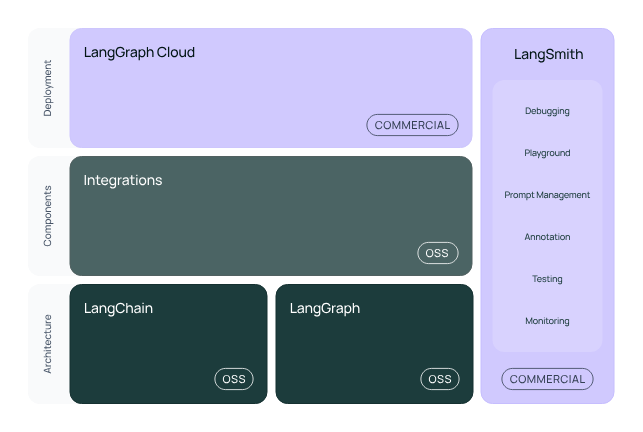

While LangChain is open-source and most of the tools built around it are free to use, the ecosystem also includes several companion products, some of them commercial:

LangServe is a tool that helps add APIs to LangChain applications. It includes a CLI that allows you to create a simple app and manage external templates. The standard setup relies on FastAPI, Uvicorn, Pydantic, and Poetry.

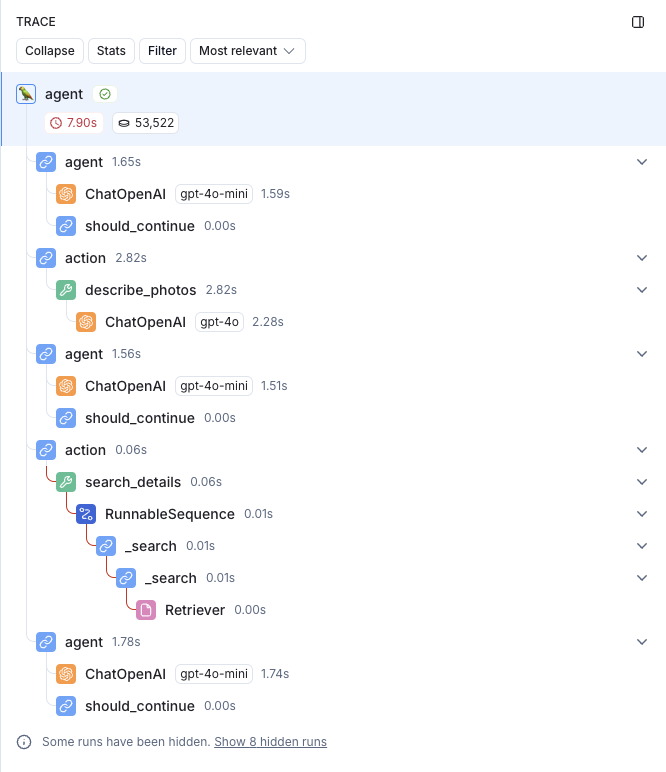

LangSmith is a development platform with tools for debugging, testing, and monitoring applications. It allows you to examine the internal workings of a LangChain application, including the prompts used, the tools called, and their interactions. This is particularly useful when combined with approaches like ReAct, where the LLM repeatedly alternates between generating reasoning and taking task-specific actions.

Additionally, LangSmith can help you understand the time and tokens spent on the entire task and individual steps. LangSmith is free while your app is in development, but it requires a subscription for production usage.
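The idea of per-step tracing can be illustrated with a small wrapper that records timing and rough sizes for each step. This is only a sketch of the concept and not LangSmith's API: `traced` is a hypothetical helper, and counting whitespace-separated words is a crude assumption standing in for real token counts.

```python
import time
from typing import Any, Callable, Dict, List


def traced(
    name: str,
    step: Callable[[str], str],
    trace: List[Dict[str, Any]],
) -> Callable[[str], str]:
    """Wrap a step so that each call appends a record to `trace`."""

    def wrapper(text: str) -> str:
        started = time.perf_counter()
        output = step(text)
        trace.append(
            {
                "step": name,
                "seconds": time.perf_counter() - started,
                # Assumption: word counts approximate token counts.
                "input_tokens": len(text.split()),
                "output_tokens": len(output.split()),
            }
        )
        return output

    return wrapper


trace: List[Dict[str, Any]] = []
summarize = traced("summarize", lambda text: " ".join(text.split()[:3]), trace)
shout = traced("shout", str.upper, trace)

result = shout(summarize("LangSmith records every step of a run"))
for record in trace:
    print(record)
```

Summing the `seconds` and token fields over the trace gives the totals for the whole task, while the individual records show where the time went.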

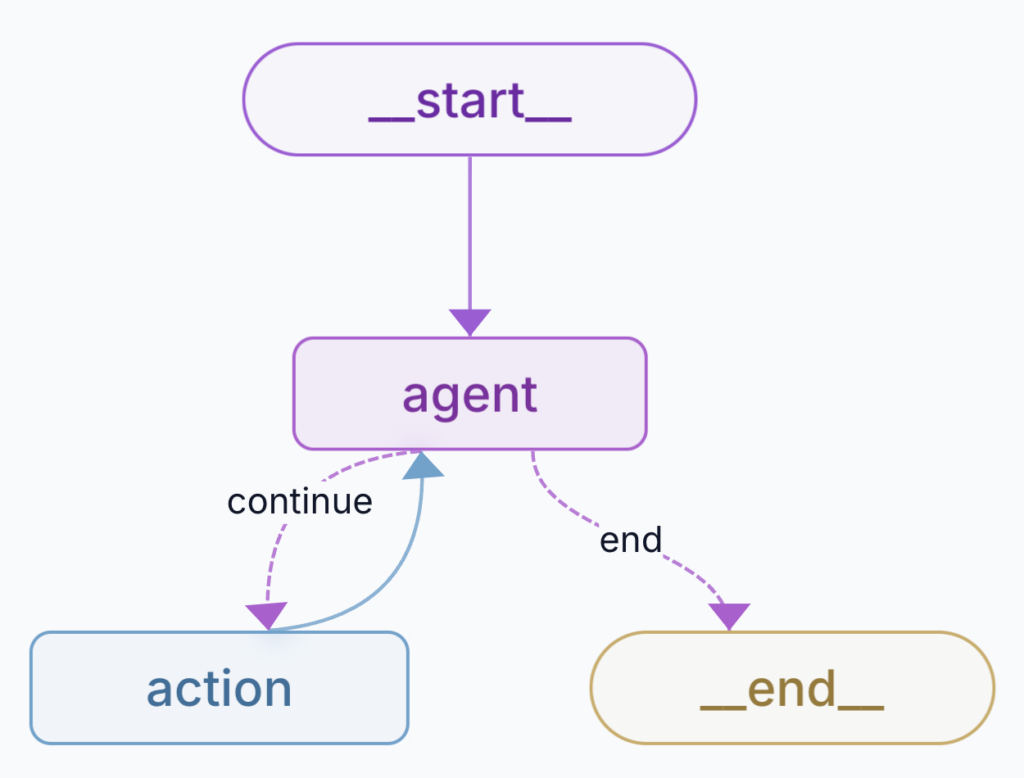

LangGraph is an open-source library built on top of LangChain that allows the creation of stateful LLM applications with multiple agents, tools, logical loops, persistence, and human-in-the-loop interaction. A LangGraph application is defined by nodes, edges, and the state passed between nodes as they are executed.
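The nodes, edges, and state model can be sketched in a few lines of plain Python. The example below is a toy executor written for illustration and does not use the LangGraph API; the node names, the `run_graph` helper, and the routing rule are all hypothetical.

```python
from typing import Callable, Dict, Optional

State = Dict[str, int]
Node = Callable[[State], State]
Router = Callable[[State], Optional[str]]


def increment(state: State) -> State:
    # Nodes return a new state instead of mutating the old one.
    return {**state, "count": state["count"] + 1}


def double(state: State) -> State:
    return {**state, "count": state["count"] * 2}


nodes: Dict[str, Node] = {"increment": increment, "double": double}

# Each edge inspects the state and names the next node (None means stop).
edges: Dict[str, Router] = {
    "increment": lambda state: "increment" if state["count"] < 3 else "double",
    "double": lambda state: None,
}


def run_graph(entry: str, state: State) -> State:
    current: Optional[str] = entry
    while current is not None:
        state = nodes[current](state)      # execute the node
        current = edges[current](state)    # follow the outgoing edge
    return state


final_state = run_graph("increment", {"count": 0})
print(final_state)  # {'count': 6}
```

The conditional edge on `increment` is what produces the loop: the same node runs until the state satisfies the exit condition, and only then does control move on.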

Additionally, there is LangGraph Studio, an IDE that simplifies the development and debugging of LangGraph applications. It allows you to interact with the app more easily and see what is called and when, similar to LangSmith, but locally. You can also fork at any execution step and re-run it with altered input or parameters.

The application can be easily deployed to LangGraph Cloud directly from the IDE. This is a paid service available to subscription users. While this is the most convenient way to deploy the app, it is not mandatory. There are manual ways to deploy a LangGraph application as well. For instance, you can use the template repository available at agent-service-toolkit to add a simple REST API service, along with a basic UI that interacts with this API. This repository can serve as a great starting point for further enhancements.

LangChain simplifies the process of building LLM-powered applications with its chains, tools, and agents. It offers essential resources for development, debugging, and monitoring, while providing the flexibility to use your own solutions.
